Talking about sugar

Nick and Maria want to talk about sugar. Does it reduce lifespan? They disagree, but are honest and principled. They want to find the truth and agree on it.

How should they talk? Take turns? Try hard to be polite and respectful? Allow interruptions? Search for origins of disagreement? Areas of agreement? Make bets? Get a moderator?

Or maybe Nick and Maria want to talk about tax exemptions for churches. It might be hard to reach consensus, since this is a matter of opinion. Still, they'd like to reduce their disagreement to different underlying values. What should they do? Repeat the other's argument? Tell personal stories? Run ideological Turing tests?

Do conversations have known best practices? How much do they improve the odds of landing on the truth?

Truth vs fitness

In East Africa 70,000 years ago, humans took their first steps toward language. This didn't happen for truth; it happened because it increased reproductive fitness. Overly open-minded people were probably easy to manipulate. Scrupulously honest people were probably bad at manipulating. Many false beliefs nevertheless enhanced reproductive fitness (typically by improving cooperation).

So, one might argue, of course we are terrible at finding truth via conversation! Why are we surprised that our instincts are bad at something we never evolved to be good at?

Cialdini

Doing some research, Maria finds the work of Robert Cialdini, who says we are persuaded by reciprocity, scarcity, authority, consistency, liking, and consensus. But these don't seem useful to Maria. They are mostly "dark patterns" of persuasion, which work for anything regardless of its truth.

Instead, are there "non-dark" patterns of persuasion that work well for true stuff but not for false stuff?

Reverse Cialdini

Over a few months, Nick and Maria have a dozen of these conversations. The results are exactly what you’d expect: No one ever changes their mind about anything. Maria has a feeling she can’t be right about everything. But try as she might, she can’t find a case where Nick’s arguments convince her.

Eventually, she has a strange idea. Maybe she should fight fire with fire, cognitive-bias wise. She decides that in each discussion they should both intentionally subject themselves to as much dark-pattern manipulation as possible. Her theory is that there is an “energy barrier” that prevents them from getting into a mind-space where it’s even possible to appreciate the other position fairly.

By temporarily brainwashing themselves a little bit, can they actually give the other position a fair chance?

Skepticism

It's reasonable to be skeptical about the above ideas. Humans have been talking for 70,000 years. If there were a trick to talking better, wouldn't we have found it already? I showed some of the above thoughts to a friend, who responded, "LOLOLOLOLOL, dynomight, you sweet wide-eyed gazelle. The rare Nicks and Marias of the world do great already. The problem is dishonest, unprincipled people."

So perhaps better conversation can't save the world. Still, I think a different type of discourse has much more room for improvement: the kind that happens in online forums. It sounds hyperbolic, but I genuinely believe this could move the needle on the future of humanity.

Just think about it. The world is a complex place. Want to understand the effects of the minimum wage? The long-term trajectory of population growth? The effects of vitamin C? No one human brain can process all the relevant information for such questions. But shouldn’t groups of brains, if we could “network” them properly, have much greater capabilities?

Dimensions of forums

Online forums have various design dimensions.

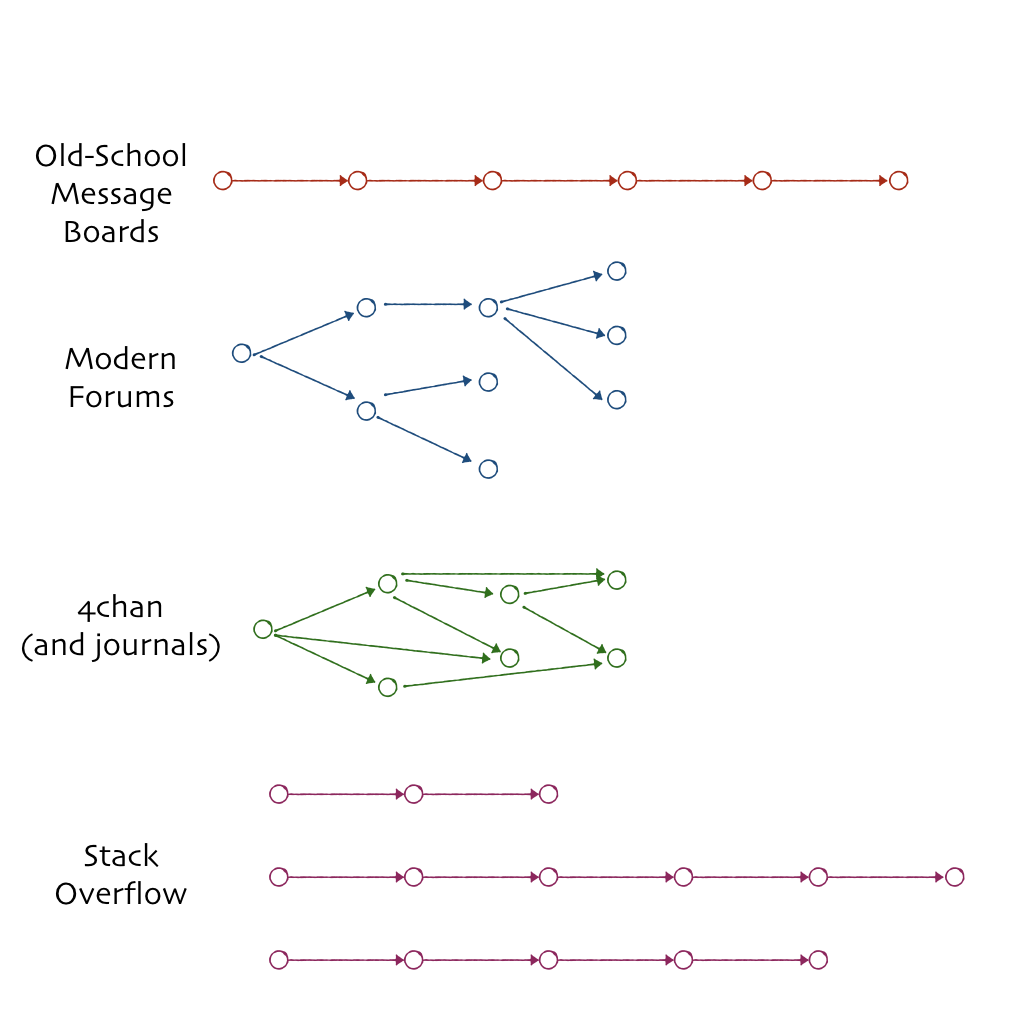

- Conversational graphs. An old-school message board is a chronological list. Modern forums (Reddit, LessWrong) arrange comments in a reply tree. On 4chan, a directed acyclic graph of replies is overlaid on the chronological list: someone can reply to multiple messages, and links at both ends of each reply let you move around the graph. On Stack Overflow, answers are ordered by votes, but comments on answers are chronological.

- Voting. Some don’t use it at all (old-school boards). Some order everything by votes (Reddit). Some use votes to order some levels but not others (Stack Overflow).

- Who can put content where? On Twitter, anyone can throw their replies up on anyone else’s content. It would be a very different place if you could only do this if that person followed you.

- Moderation. This varies from "Moderate to Crush the Human Spirit" (Stack Overflow) to "Moderate When the Alternative Is Prison" (4chan).
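To make the conversational-graph dimension a bit more concrete, here is a minimal Python sketch of three of the structures above: a chronological list, a reply tree (each comment has at most one parent), and a 4chan-style reply DAG (a comment may reply to several earlier ones, with back-links computed for the other end). The `Comment` class, its fields, and all function names are hypothetical illustrations, not any real forum's data model.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Comment:
    # Hypothetical minimal comment record; ids increase with posting time.
    id: int
    text: str
    replies_to: list[int] = field(default_factory=list)  # ids of parent comments


def chronological(comments):
    """Old-school board: ignore reply structure and sort by posting order."""
    return sorted(comments, key=lambda c: c.id)


def is_tree(comments):
    """Reply tree: every comment replies to at most one parent."""
    return all(len(c.replies_to) <= 1 for c in comments)


def backlinks(comments):
    """DAG back-pointers: for each comment, the later comments that reply to it."""
    links = {c.id: [] for c in comments}
    for c in chronological(comments):
        for parent in c.replies_to:
            links[parent].append(c.id)
    return links


def flatten_depth_first(comments):
    """Reddit-style display as (depth, id) pairs, walking replies depth-first.

    In a DAG, a comment with several parents is listed once per path from a
    root, which is one concrete way that flattening distorts the graph.
    """
    children = backlinks(comments)
    roots = [c.id for c in chronological(comments) if not c.replies_to]
    order = []

    def visit(cid, depth):
        order.append((depth, cid))
        for child in children[cid]:
            visit(child, depth + 1)

    for root in roots:
        visit(root, 0)
    return order


thread = [
    Comment(1, "Sugar shortens lifespan."),
    Comment(2, "Source?", [1]),
    Comment(3, "Here is a study.", [1, 2]),  # replies to two messages at once
    Comment(4, "That study is confounded.", [3]),
]
print(is_tree(thread))  # False
print(backlinks(thread))  # {1: [2, 3], 2: [3], 3: [4], 4: []}
print(flatten_depth_first(thread))  # [(0, 1), (1, 2), (2, 3), (3, 4), (1, 3), (2, 4)]
```

Comments 3 and 4 show up twice in the depth-first listing because comment 3 replies to two earlier posts. A reply-tree forum avoids this by forbidding multiple parents, and a chronological board avoids it by ignoring reply structure altogether; each choice throws away some information.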

It's hard to understand the influence of most of these choices, since popular forums vary along many dimensions at the same time. Most forums today are optimized for the goal of "make forum owners rich." We don't know their decision-making process, or what tradeoffs they would make under different goals.

I don’t think it’s possible to sit around and figure out what effects a given forum design will have. Human beings and social behavior are too complex. We need to systematically test the different designs, and see what actually happens empirically. Is anyone doing that?

Ideas for forums

Here are some ideas:

- Sometimes people have useful ideas, but present them in a long, boring, hard-to-read form. Can we allow users to edit each other's content?

- "Flattening" comments into a linear order is always a distortion. Why not lean in to the idea of a full conversational graph, with some kind of visualization that allows people to elegantly navigate it?

- Inside a conversation are many interesting sub-conversations. Can users create “curated views” to highlight the best comments in one sub-conversation?

- We say we want beauty and truth and to be better people. But we can't resist cat videos and the latest political outrage. Can we allow users to "Ulysses nudge" themselves, by choosing the kind of content they wish they enjoyed? Let the algorithm try to manipulate the user into doing what the user's "better self" wants.

- Forums are great ways to share knowledge. Prediction markets are also a great way to combine knowledge. Can we find an effective way to combine the two?

These ideas are all probably terrible. I'm just trying to say that there are a lot of possibilities, and some of them are surely good.

Suppose we could look 30 years into the future. Forums will no doubt look very different. Probably some of the differences rely on exogenous technological innovation. But surely some differences could be “back-ported” to today, if only we knew what they were. What are they, and why is the pace of innovation in online forums so slow right now?

Journals as steampunk forums

If we want a historical example of “public forum with rules and norms carefully derived to find truth” I think the best we can do is the system of journal publications. For all their imperfections, these have done a decent job of uncovering truth for several hundred years. What lessons do these offer?

I think for our purposes, the three biggest differences vs. online forums today are:

First, journals have a crazy focus on credit attribution. There is a formalized system of citations. Reviewers check that the claimed new ideas in papers really are new, and that credit (citations) has been given to all (most? some?) related work. One paper can reply to (cite) many other papers. (Journals share this with 4chan!)

Second, journals provide strong quality signals coupled to heavy "moderation". In most fields there is a fairly clear status hierarchy. The moderators (reviewers/editors) at top journals spend a lot of effort moderating, and are very picky about what they accept. This sends a strong signal about the papers that are accepted.

Third, there are extremely strong external incentives for people to appear in the top journals. (Ultimately, jobs and money are on the line.)

There are other differences (e.g. journals are slow and cost money) but I think the three above are the most significant. What would happen if we added these to online forums today? Unfortunately, it's a bit hard to say. These wouldn't be easy to copy. The first is laborious, and the others are as much properties of the society the journal is embedded in as of the journal system itself.

Failure modes

Maybe figuring out how to improve forums is hard. As a first step, maybe we can at least understand where things go wrong? Here are some proposed failure modes.

The user death spiral. Some cool people start a forum and have cool conversations. Random trolls show up sometimes, but they are easily banned. Eventually, some not-quite-as-cool people find their way to the forum. They aren't misbehaving and make some good points sometimes. It feels tyrannical to ban them, so no one does. Still, the coolest people become slightly more likely to drift away. New very-cool people become slightly less likely to join. Eventually the median shifts enough that barely-cool-at-all people are joining. Gradually, the average coolness of people in the forum decreases to zero.

The tyranny of the minority. Human experience is vast. There are people out there who truly, passionately believe that bestiality should be legal and accepted. Some are smart and compelling writers. Almost all forums block these people. You don't, figuring that you believe in free speech, these people are a tiny minority, and the truth will emerge as they argue with the majority. Suddenly, in every thread people are finding connections to the "injustice" of the current prohibition on bestiality. Why? Because every other high-status place on the internet prohibited these people, and they've all been funneled to you.

The village becomes anonymous. In small forums, you see the same person repeatedly. This has two advantages: A) You know people will remember you, and you want them to be nice to you, so you try not to act like a jerk. B) After seeing the same people for a while, you have some context for their comments. The conversation can actually evolve and grow over time. After a certain number of users, each comment must stand alone. The forum has “amnesia”. Why be nice or clarify your argument when no one will remember what happened?

The dark shift. You run a nice happy forum, with a fixed set of users. One day, Maria is in a bad mood, and writes a funny but slightly dismissive response. Since it's funny, everyone laughs. The target of her comment resents it and eventually finds a way to score points back on Maria. Others notice and start imitating this behavior. These comments get more common, and then meaner. People become very cautious when posting, making sure they don't leave an opening to attack. In fact, why make a constructive argument at all? Much easier to find someone on the other side and attack them! Nuanced discussion becomes impossible.

The Necessary Despot. You run a forum. It gets overrun with bestiality people. You ban them. The users start getting rude. You ban them. The banned users come back. You find sneaky ways to make them invisible and let them scream into the void instead. Some topics are popular but always lead to your users arguing. You ban those topics. The forum survives, but you work all the time enforcing your tyrannical-seeming constraints. Users grumble. You drink too much and worry the forum just isn’t that interesting anymore.

The tidal wave. You're some kind of genius. No one knows how you do it, but your forum grows and grows while maintaining quality. Every comment is well-reasoned, polite, insightful, and hilarious. Still, things feel slightly out of control. Every thread has thousands of comments. The discussion naturally breaks up into different sub-topics. You notice that the same sub-topics emerge in different parts of a thread. These conversations often focus on different data and, ominously, arrive at different conclusions. You try to focus threads better, confining discussion to smaller sub-topics. But the comments just keep coming. Each sub-topic is re-proposed again and again. Each thread breaks into sub-sub-sub-sub-topics. Some users valiantly try to link related discussions, but they can't keep up. You have growing doubts about the existence of objective truth and start having nightmares where hordes of comments spill over the walls of your bunker.

It's fun to speculate about how badly different forums suffer from each of these modes. Here are my totally subjective rankings of a few. (Here I'm picking on Marginal Revolution just as an example of "what always happens when an old-school blog with high quality content uses laissez-faire moderation.")

| Failure Mode | Marginal Revolution | Hacker News | /r/slatestarcodex | |
|---|---|---|---|---|
| User-quality death spiral | 💀💀💀 | 𐄂 | 💀 | 𐄂 |
| Tyranny of the minority | 💀 | 𐄂 | 𐄂 | 𐄂 |
| Village becomes anonymous | 𐄂 | 💀 | 💀 | 💀 |
| Dark shift | 💀💀 | 𐄂 | 𐄂 | 💀💀💀 |
| Necessary despot | 𐄂 | 💀💀 | 💀💀 | 𐄂 |
| Tidal wave | 𐄂 | 💀💀 | 💀 | 💀💀💀 |

Are these the most common failure modes? I don’t know. And really, what are the answers to all the questions in this post? I don’t know! But we should keep re-asking important questions until we have answers.